Within the context of our seminar „Writing Across Languages: The Post-Monolingual Anglophone Novel“, our task was to test, how the software „Jupyter Notebook“, equipped with an English database, classified foreign words in a novel that is mostly written in English. The relevant categories were parts of speech and dependency tags. As „Jupyter Notebook“ was too tiresome to install, we worked with a copy of a Jupyter notebook in Google Collab instead. We had two example sentences which we could use in order to become acquainted with the software. Our main job was to read our novel and to note down examples of multilingual text passages, so that they could be annotated by the software.

This preparation for these sentences to be annotated by the software posed a few problems for me. My first problem was that my book, „Babel“ by R.F. Kuang, uses a lot of Chinese words and these words are sometimes presented as a Chinese character. I had some problems with copying the Chinese characters. The problem was not so much what the character meant or to what it translated in English, as this was indicated most of the time, but I had no idea on how to copy the Chinese characters, especially as the Kindle app does not allow copying words or phrases from the app. My initial idea was to enter the romanized version of the character or its meaning in English into the Google translator and to then just copy the Chinese character from there. However, this didn’t work because it already said in the book that the usage of this character for this meaning was quite unusual and the Google translator only indicates one possible way of writing a Chinese word as a Chinese character. My second idea was to take a photo, to copy the Chinese character from there and to then paste it into my document. This also didn’t work because I couldn’t copy the characters in the apps that I have tested either. After some unsuccessful tries with apps in which the user can draw the Chinese character and during which the Chinese characters could not be recognized by these apps, I ended up on this website: https://www.qhanzi.com/index.html. This website also allows the user to draw the Chinese character and then guesses what Chinese character you drew, but it seems to have a much larger database than the apps I tested. Here is an example:

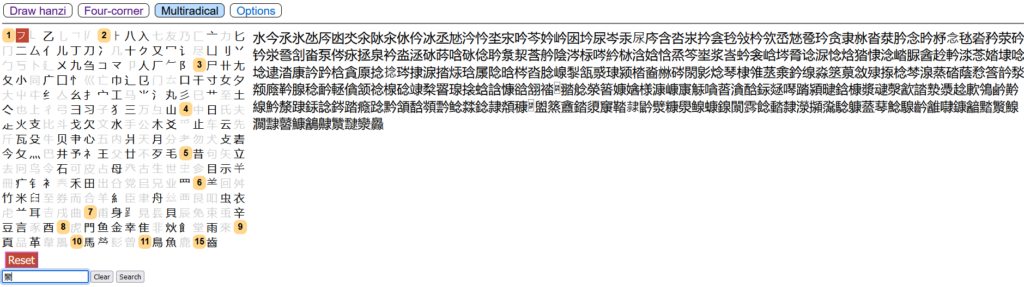

In this example, I wanted to draw the first character in the suggestions below. In the cases of multiradical characters, meaning Chinese characters which consist of more than one radical, I had to choose the option „Multiradical and then chose characters from a large list. Here is also an example:

These two methods take a lot of time, of course, but in the end, I managed to find all the characters that I needed.

My second, more minor problem while copying the text from the app into my document were the horizontal lines above vowels in romanized Chinese writing and also in Latin. In my research, I learned that in Latin, these lines show the stress of a particular vowel. One way or another, I knew that I had to indicate these lines above a vowel somehow. In the end, I found a Q&A page onn which a user indicated how to type these lines above vowels on the keyboard. This is the page I used: https://www.gutefrage.net/frage/lateinischer-betonungsstrich-word. Just like the website with the Chinese characters, this isn’t an academic website, but for my purpose, it sufficed.

Annotating the training sentences

As I mentioned earlier, I first went through the example sentences. While going through each of them individually, I will name a few mistakes that the software made along with some correct annotations which are decidedly fewer. The mistakes that I name are usually first about words that are foreign to the English language, and then, if there are any, mistakes which were made concerning words which belong to the English language. Concerning the correct annotations, I only mention those words which are non-English, as it should be the norm that English words are annotated correctly, considering that the data with which the software was trained, was written in English. The first training sentence was:

Tías called me blanca, palida, clarita del huevo and never let me bathe in the sun. While Leandro was tostadito, quemadito como un frijol, I was pale.

Lickorish Quinn 129

The mistakes that the software made which I recognized were that “palida” was categorized as a conjugation (correct: adjective) and that “frijol” was classified as an attribute. Concerning the English words, the only mistake that I recognized was that „let“ was classified as a conjunction (correct: auxiliary). Some correct decisions that the software made were that “Tías” was classified as a nominal subject, that “blanca” was classified as an object predicate, that “clarita del” was classified as a compound, but that huevo was classified as a conjunction and that “tostadito” was classified as an adjectival modifier.

The second training sentence which was annotated was:

In Chinese, it is the same word ‘家’ (jia) for ‘home’ and ‘family’ and sometimes including ‘house’. To us, family is same thing as house, and this house is their only home too. ‘家’, a roof on top, then some legs and arms inside.

Guo 125-26

The mistakes here were the following: the first “家” was classified as an appositional modifier, but also as a noun (which is correct), the second “家” was classified as an unclassified dependent and thus radically differs from the first annotation, and “jia”, which is the romanized version of the Chinese character “家”, was categorized as a proper noun (correct: noun) and an appositional modifier. Concerning the English words, there were also a few mistakes: “family” was considered a conjunction (correct: noun), “is” was classified as a conjunction (correct: auxiliary), “legs” was classified as a noun (which is correct) and as a root and “arms” was classified as a conjunction (correct: noun).

Annotating the quotes from „Babel“

As I was curious, how the software would react to sentences with Latin words, I started with a fairly easy one:

But in Latin, malum means “bad” and mālum,’ he wrote the words out for Robin, emphasizing the macron with force, ‘means “apple”.

Kuang 25

The mistakes in the annotation were that the first “malum” was categorized as a noun (correct: adjective) and a nominal subject (correct: adjectival subject) and that the second „mālum“ was classified as a proper noun (correct: noun) and a conjunction (correct: subject). The English words in this sentence, however, were categorized correctly. To me, this shows that the software does not understand the sentence because otherwise, it would have recognized that „malum“ means „bad“ and is thus an adjective and that „mālum“ means „apple“ and is thus a noun.

Okay, a sentence with Latin was not annotated successfully. Let’s see whether a Chinese word is better. An example would be:

Wúxíng – in Chinese, ‘formless, shapeless, incorporeal’. The closest English translation was ‘invisible’.

Kuang 65

Nope, the annotation to this sentence was even worse. First of all, “Wúxíng” was classified as a proper noun (correct: noun) and as a root (which could be correct, as there is no main verb which could be the root of the sentence). Furthermore, there are a few English words which were not annotated correctly: “Chinese” was classified as a proper noun (correct: noun) and “formless” was classified as an adjectival modifier, while “shapeless” was classified as an adverbial modifier (correct: probably adjectival modifier).

Now, I was invested and wanted to get to the bottom of this weird annotation of foreign words by the Jupyter Notebook. That’s why I chose this as my third sentence from „Babel“:

Que siempre la lengua fue compañera del imperio; y de tal manera lo siguió, que junta mente començaron, crecieron y florecieron, y después junta fue la caida de entrambos.

Kuang 3

Honestly, I expected this sentence to be full of either classifications as proper nouns or full of mistakes. The mistakes in this sentence concerning the foreign words were that “crecieron” and “florecieron” were classified as nouns (correct: verbs), “de” was classified as unknown (correct: preposition) and that the rest of the words were classified as proper nouns. Concerning the clausal level, the words were either classified as compounds or as appositional modifiers. Interestingly, the software correctly recognized “la” as a determiner and “imperio” as a direct object. I don’t know whether the software was just lucky with these two annotations or whether the place of the words in these sentences somehow influenced the correct annotation. One or way or the other, it is clear that this software cannot annotate words in a language other than English very consistantly.

As I recognized that it made little sense to enter entire sentences in another language into the software, I wanted to make the work for the software as easy as possible. Thus, I returned to Chinese and entered the following sentences next:

He will learn. Tā huì xué. Three words in both English and Chinese. In Latin, it takes only one. Disce.

Kuang 26

I was hoping that the software would recognize that the first two sentences were a translation from each other and that they thus would be categorized correctly. I was disappointed because in English, the words “He will learn“ were correctly classified as a pronoun, an auxiliary and as a verb, while in Chinese, “Tā huì xué” were classified as a noun, a noun and a verb, which is, based on the English translation (as I don’t speak Chinese) is not correct. Apart from that, “English”, “Latin” and, unsurprisingly, “Disce” were categorized as proper nouns, while actually, „English“, and „Latin“ are nouns, while „Disce“ is a verb. One further mistake consisted in the software annotating „Chinese“ as a conjunction (correct: noun). The different annotations of one and the same sentence in different languages confirms my assumption that the software does not actually understand the words that it annotates.

Okay, Chinese didn’t work. I was curious whether another language which is closer to English would help. So, I chose two sentences in French from Babel:

‘Ce sont des idiots,’ she said to Letty. / ‘Je suis tout à fait d’accord,’ Letty murmured back.

Kuang 71

The result from this annotation was also disappointing. “Ce” was classified as a verb (correct: determiner), “sont” (correct: verb) and “des” (correct: article) as an adjective, “Je” (personal pronoun) and “tout à fait d’accord” were classified as proper nouns, “ce” was classified as an adverbial clause, “sont” and “des” was classified as adjectival modifiers, “suis” was classified as a clausal complement, and “à fait” was classified as a compound, while “tout” and “d’accord” were classified as a noun phrase as adverbial modifier and as a direct object, respectively. Interestingly, the words “idiots” as a noun and as a direct object and “suis” as a verb were classified correctly.

Okay, a closer language like French didn’t work. Maybe German could be a better help for the software to annotate the foreign word correctly. That’s the reason why I chose this sentence:

But heimlich means more than just secrets.

Kuang 81

I figured that, as “heimlich”, or rather, its negative counterpart “unheimlich”, is often used in the context of horror literature, maybe the software would be able to recognize this word and thus annotate it correctly. However, I was, again, disappointed, as “Heimlich” was classified as a noun (correct: adjective) and a nominal subject (correct: adjectival subject).

Next, I was again intrigued by the Chinese language and I wanted to know whether the results of the Chinese character and its translation in the training sentence above were just a coincidence. So, I chose the following sentence, which is similar to the training sentence, with a Chinese character:

Why was the character for ‘woman’ – 女 – also the radical used in the character for ‘slavery’? In the character for ‘good’?

Kuang 110

The answer was: no, the results from the training sentence were not a coincidence: “女” was classified as unidentified (correct: noun) and as an appositional modifier. Furthermore, an English word was also categorized incorrectly: “radical” was wrongly classified as an adjective (correct: noun). The classification of „In“ as the root could be correct, as the sentence „In the character for ‚good‘, there is no verb which could be the root of the sentence.

Okay, now, Chinese was for me out of the question. Maybe the French and German words were not established enough in the English language. So, I decided to choose a sentence with a French word which I have already heard to have been used in other English sentences:

‘It’s not the company, it’s the ennui,’ he was saying.

Kuang 144

Well, “ennui” was, indeed, correctly classified as a noun and as an attribute. However, the particle “’s” was classified as a clausal complement. But nevermind, we’re making progress concerning the annotation of the non-English words.

Next, I was interested in how the software would handle Greek words. As an example sentence, I chose:

The Greek kárabos has a number of different meanings including “boat”, “crab”, or “beetle”.

Kuang 156

Just like the sentence before, the foreign word was classified correctly, in this context as a noun and as a nominal subject. However, now the software seemed confused about the classification of the English words: “including” was considered a preposition, but also to be a verb, “crab” was considered as a noun, but also as a conjunction, while “beetle” was considered to be a verb (correct: noun) and a conjunction.

Okay, nice, the Greek word was also no problem for the software. As I am a native German speaker, I wanted to give a second chance to the annotation of a German word in my last example. I chose this as an example sentence:

‘The Germans have this lovely word, Sitzfleisch,’ Professor Playfair said pleasantly when Ramy protested that they had over forty hours of reading a week.

Kuang 168

Concerning the German word “Sitzfleisch”, I was disappointed because the software classified it as a proper noun (correct: noun) and as an appositional modifier. Concerning the English words, there were also some mistakes: “Professor” was classified as a proper noun (correct: noun) and a compound, while “Playfair” was classified as a nominal subject, “have” was classified as a clausal complement, “when” was classified as an adverbial modifier and “protested” was classified as an adverbial clause modifier.

A more general problem that I encountered while annotating these sentences was that some tags like “oprd” were neither mentioned in the table we received in order to recognize the clauses and the parts of speech, nor were they mentioned in the spaCy article. Instead, I found this website, which helped me with the abbreviations: https://stackoverflow.com/questions/40288323/what-do-spacys-part-of-speech-and-dependency-tags-mean.

Another, very minor technical problem concerned the work with Google Collabs. The program sometimes gave me feedback that I had opened too many notebooks that were not closed yet although I had closed them. To solve this problem, however, I simply had to click “Close all notebooks except the current” or something like that and then I could continue annotating.

On a more positive note, the software consistently succeeded in the indexing of the tokens and in classifying punctuation marks as such. The only exception that I found was the apostrophe in „’s“.

Comprehension questions

I don’t really think that I still have any comprehension questions, I am just not quite sure whether I correctly assessed the parts of speech and the dependency tags because the linguistics class in which I learned these terms was quite a long time ago in about 2017. That is also the reason why I mostly didn’t indicate the correct dependency tags if they were obviously wrong. I googled or try to look up the abbreviations and what they meant, of course, but I am still not quite sure whether there aren’t still some mistakes that I made in this regard. That’s why I also didn’t check whether the tree at the bottom of the interface was correct. There could be some answers as to why the software classifies some words the way it does, but right now, I didn’t see a systematic wrong approach to foreign words except that they are often classified as proper nouns and as compounds. I was also not quite sure how to write this blog entry as I haven’t written a blog entry yet and I am not that into blogs myself.