Before taking this course, I already had previous experience with video editing software, including subtitling and working with timelines. However, I had never worked with DaVinci Resolve, which meant relearning the basics, and I was trying to subtitle a video …

Tag Archives: video editing

Final Session (Summer Term 2023)

During our last session on July 13, 2023, each student group presented the final results of the projects we had worked on over the course of the semester. It was a great way to get a better understanding of the folktales other than the ones we had been working on ourselves. Together we looked at the different video editing and coding experiences and talked about the individual difficulties we had during the process.

Coding

At the beginning of this semester most of the students, myself included, struggled with the coding part of this class. The most common mistakes and difficulties were:

- forgetting to close the tags

- changing the geographic coordinates in the header

- finding quotes in the text so that they can be properly coded

- finding all words for the notes and glossary

- not using the <q> tag

For me at least, it was a foreign experience and way beyond my “academic comfort zone”. Nonetheless, it was an experience that greatly benefitted me in the end.
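The unclosed-tag mistake from the list above is the kind of thing a parser can catch automatically. Here is a minimal sketch in Python, assuming the course files are XML (the post only mentions tags, a header and `<q>`, so that is a guess). The two snippets are made up for illustration and are not taken from our actual files.

```python
import xml.etree.ElementTree as ET


def check_well_formed(markup: str) -> str:
    """Return 'ok' if the markup parses as XML, otherwise the parser's error message."""
    try:
        ET.fromstring(markup)
        return "ok"
    except ET.ParseError as err:
        return f"error: {err}"


# Hypothetical snippets (assumption: XML-style markup with a <q> element for quotes).
good = "<p>The hyena said: <q>Let us wrestle.</q></p>"
bad = "<p>The hyena said: <q>Let us wrestle.</p>"  # <q> is never closed

print(check_well_formed(good))
print(check_well_formed(bad))
```

The error message includes the line and column where the parser gave up, which is usually close to the tag that was left open.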

Video Editing

This class wasn’t the first time I had used video editing programs, but I had never worked with DaVinci Resolve before. Since I missed both tutorial sessions, I had to figure things out by myself, but thankfully our instructors provided us with a detailed step-by-step guide. Nonetheless, a few things proved really difficult in the beginning:

- locking the subtitles and setting them at the right place (the timestamps were sometimes confusing)

- adding a title page without shifting any of the subtitle, audio or video tracks

- inserting the credits at the end

These were all things that most of the students struggled with, and together we came up with helpful suggestions for solving these problems, e.g. using additional editing programs or creating the videos in multiple steps to avoid shifting the subtitles. In the end, most of us felt confident that we could use DaVinci Resolve again with considerably less effort.

Results

At the end of our last session we talked about the class in general and gave feedback on our individual experiences and accomplishments. Personally I am really glad that I had the chance to participate since I gained a lot of new skills and insight into the Konkomba culture.

Presenting our Videos

In this week’s class we had our group presentations on the videos we had to edit and subtitle at home. We looked at what we had done so far and at the problems we had encountered while subtitling and editing with DaVinci Resolve.

The biggest problems that were mentioned had to do with the title, the timestamps and the software.

Also, as a community, we made some changes and discussed improvements to be made.

Issues:

Title -> One person only had an audio recording of the folktale being told, not a video. She had a black screen at first but then figured it out and used a lot of pictures matching the audio. She ran into problems when putting the title before the audio, because the subtitles then already appeared during the title.

We also used the English titles of the folktales rather than the original ones because we had decided earlier in class to use those.

Timestamps -> The timestamps are given in seconds and milliseconds, which made it difficult for some of us to transfer them directly into DaVinci Resolve, since the video sometimes shifted in the program because of the title. That is why some had to listen to the speakers’ voices (even without understanding Likpakpaln) to fit the subtitles to what was being said. Others placed the subtitles first and added the title afterwards, but that also caused some videos to shift. Some timestamps were also not that accurate, so people went with their gut and listened for key words. This raised the question of whether we should focus on the exact timestamps or on the characters per second.
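The conversion and the shift caused by the title can be sketched in a few lines of Python. This is only an illustration under assumptions that are not from our class materials: a frame rate of 25 fps, a title card of 5 seconds, and a timecode written as `HH:MM:SS:FF`. The function name `seconds_to_timecode` is made up for this example.

```python
def seconds_to_timecode(seconds: float, fps: int = 25, offset_seconds: float = 0.0) -> str:
    """Convert a timestamp in seconds to HH:MM:SS:FF, shifted by an optional offset.

    fps and offset_seconds are assumptions; use the values of your own project.
    """
    # Work in whole frames so that the milliseconds land on a real frame.
    total_frames = round((seconds + offset_seconds) * fps)
    frames = total_frames % fps
    total_seconds = total_frames // fps
    hours, remainder = divmod(total_seconds, 3600)
    minutes, secs = divmod(remainder, 60)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}:{frames:02d}"


# A subtitle that starts at 83.52 s in the original recording.
print(seconds_to_timecode(83.52))                      # 00:01:23:13
# The same subtitle after a hypothetical 5-second title card was put in front.
print(seconds_to_timecode(83.52, offset_seconds=5.0))  # 00:01:28:13
```

Adding the title length as one offset to every timestamp keeps the subtitles in sync with the speech, whichever order the title and subtitles are added in. If the timeline itself does not start at zero, that start time is a further offset to add.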

Software -> For some it was difficult to get used to the software. One person did not know how to cut the video at the end before the credits, but she received help and fixed it. We also noticed that the video quality got worse after we finished editing, probably because of the adjusted frame rate.

One folktale had a song, and the editor changed the subtitles to another color to make it clearer when they were singing. The storyteller was also speaking very fast, so the editor had to bring the number of characters per second down and left no gaps between the subtitles.

Additional changes / information

It was also mentioned that the presentation on video editing and subtitling was very helpful when problems arose.

We also decided to add “Düsseldorf – HHU” to the credits after the “Centre of Translation Studies” to make them more accurate.

The pyramid form (a shorter top line above a longer bottom line) is strongly preferred when subtitling.

The word Ulambidaan was not translated because there was no good English translation for it. It is a common noun in Likpakpaln and it describes a psychological or medical condition, comparable to a hyperactive child who also takes joy in teasing and making fun of other people.

The word Ubor was also left unchanged because it means chief or political leader of the people and is well known and common.

Folktales are often told during other communal activities, such as cracking shells by hand, to keep the participants entertained. The folktale and video “The Monitor Lizard nearly floors the Hyena in a wrestling match” is a good example of an occasion on which folktales are performed.

Other than that, we were told that a few things had been added to our code for the final presentation next week, where we will present everything we have done throughout the semester with the help of the PowerPoint presentations we are preparing.

On the Subtitling of Orature

As I was unable to join today’s session, I’m going to discuss the process of video editing and subtitling which we began last week instead of focusing on the topics discussed in class.

The small amount of video editing experience I had going into this project didn’t quite prepare me for just how finicky this ended up being. We were kindly provided with translated and timestamped subtitles for our respective videos, but the editing process was much more complex than just copy-pasting the text and numbers. There are some general rules that good subtitles need to abide by in order to fulfill their purpose. Ultimately, I needed to adjust most subtitles to a certain degree to meet those requirements.

An Arduous Process

The size and letter spacing of the subtitles can easily be adjusted based on intuition alone, but the same cannot be said about the two main problems I encountered:

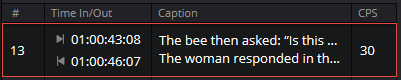

Firstly, the duration and pacing of the subtitles. One full second is generally considered to be the minimum duration, though this obviously only works for very short subtitles. In some cases, a storyteller may speak rather quickly, forcing the subtitles to change at a very fast rate. This leads to a high number of characters per second (CPS), which makes them hard to read. There was a section in one of the videos where I ultimately had to combine two separate subtitles into one because even just the small pause between them pushed the CPS beyond 30, which is much too fast for most people. Since subtitles are meant to promote accessibility, this obviously wouldn’t do.
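To make the CPS idea concrete, here is a small Python sketch. It is not how DaVinci Resolve calculates anything, just the plain arithmetic: characters divided by on-screen duration. Counting spaces as characters is an assumption (conventions differ), and the two example subtitles and their timings are invented.

```python
from dataclasses import dataclass


@dataclass
class Subtitle:
    start: float  # seconds
    end: float    # seconds
    text: str


def cps(sub: Subtitle) -> float:
    """Characters per second, counting spaces (an assumption; conventions vary)."""
    duration = sub.end - sub.start
    if duration <= 0:
        raise ValueError("subtitle must have a positive duration")
    return len(sub.text) / duration


def merge(first: Subtitle, second: Subtitle) -> Subtitle:
    """Combine two consecutive subtitles so the pause between them counts as reading time."""
    return Subtitle(first.start, second.end, f"{first.text} {second.text}")


# Invented example: a fast line followed by a short pause and a slower line.
a = Subtitle(10.0, 11.0, "The hyena laughed loudly at him")
b = Subtitle(11.2, 12.2, "and walked away")

print(f"first alone: {cps(a):.1f} CPS")
print(f"merged:      {cps(merge(a, b)):.1f} CPS")
```

In this example the first subtitle alone is above 30 CPS, while the merged one is well below it, because the merged subtitle stays on screen through the pause and the slower second line.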

Secondly, dealing with multiple speakers. In one video, there is a second person who interjects into the story with a short comment and later joins the storyteller in song. The problem was that, aside from the song, the two people didn’t really speak simultaneously. A shared subtitle for both felt strange, as it would either begin before the person in question started speaking or linger too long, which also just felt slightly off. As a result, I split the subtitles for the storyteller and the audience member into two separate regions that could appear and disappear separately. I also colored them differently, so that it was easier to tell at a glance who was speaking.

In conclusion:

Orature like what we have been working with in this course is meant to be performed. Making those performances accessible and understandable to people who don’t speak the language of the storyteller is an essential part of the process of demarginalization.

Working on editing and subtitling these videos has given me a new appreciation for anyone who has ever provided high-quality subtitles for any kind of media. It’s time-consuming, but ultimately very important work, not just in the context of our course.